- Word

Shannon information

- Image

- Description

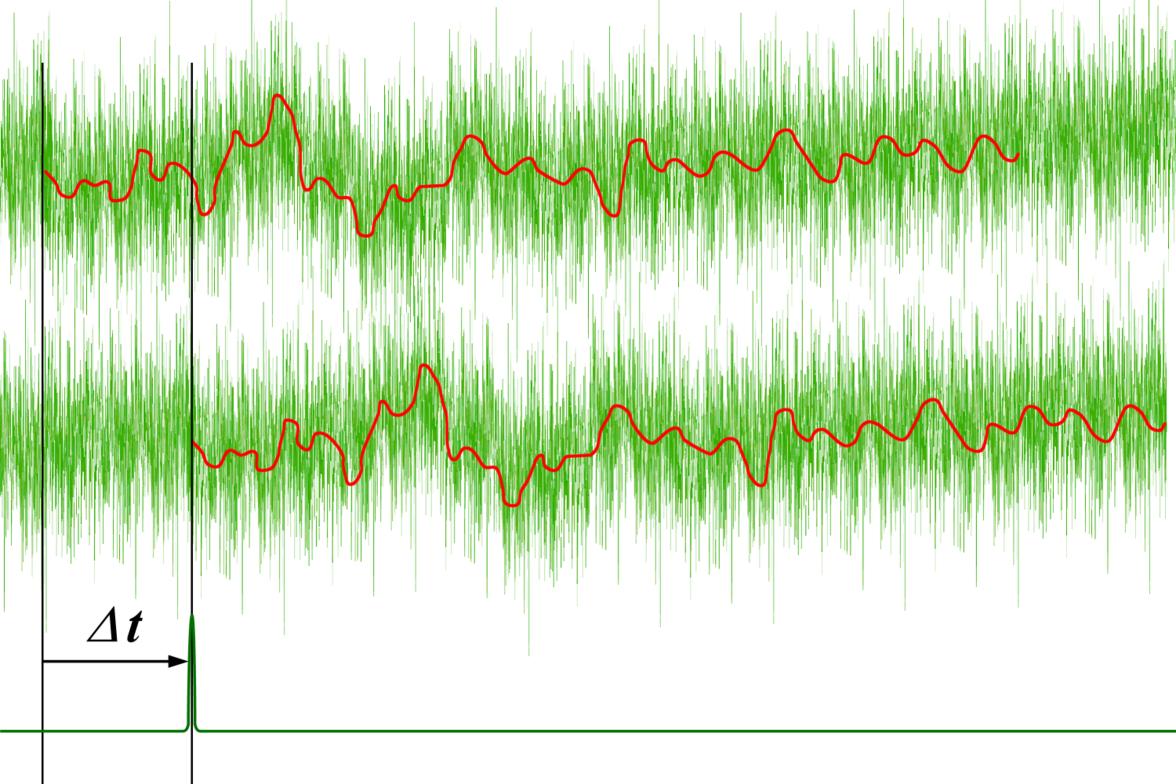

Shannon information, described by mathematician Claude Shannon, is a measure of the unpredictability of a message, given a message source, communication channel, and receiver. This unpredictability, or entropy, is the average over the message's elements of the negative logarithm of each element's probability, so rare elements contribute more surprise than common ones. The higher the entropy, the more information the message carries on average.
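
As a rough illustration, entropy in bits per symbol can be written H = -Σ p(x) log2 p(x), where p(x) is the probability of symbol x. The sketch below estimates p(x) from the symbol frequencies within a single string, which is an assumption made for this example; a real message source may have a different underlying distribution. The function name `shannon_entropy` is hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Estimate entropy in bits per symbol from the symbol frequencies
    observed in `message` (an assumption: the true source distribution
    may differ from these empirical frequencies)."""
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    # p * log2(1 / p) equals -p * log2(p); summed over all distinct symbols.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(shannon_entropy("aaaa"))  # one symbol only, fully predictable: 0.0
print(shannon_entropy("abab"))  # two equally likely symbols: 1.0
print(shannon_entropy("abcd"))  # four equally likely symbols: 2.0
```

A message made of one repeated symbol is fully predictable and has zero entropy, while spreading the probability evenly over more symbols raises it.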

- Topics

- Entropy, Information Theory

- Difficulty

- 1

- Sources

- []